- Harsh Maur

- November 24, 2024

- 7 Mins read

- Scraping

Automating Data Validation with Python Libraries

Validating web-scraped data ensures it's clean, accurate, and usable. Manual validation is slow and error-prone, especially with large datasets. Python libraries like Beautiful Soup, Scrapy, and Cerberus automate this process, saving time and effort. Here's a quick overview of what these libraries can do:

- Beautiful Soup: Parses messy HTML/XML to extract and validate data.

- Scrapy: Automates data cleaning and validation during scraping.

- Cerberus: Enforces custom data rules and schemas for structured datasets.

- Requests: Ensures reliable web responses.

- Selenium: Handles validation for dynamic, JavaScript-heavy websites.

Key Validation Steps:

- Define Data Schemas: Set rules for required fields, formats, and ranges (e.g., prices > 0, valid emails).

- Check Data Types/Formats: Ensure consistency (e.g., dates, numbers, URLs).

- Validate Ranges/Boundaries: Catch anomalies like negative prices or invalid percentages.

For large-scale or complex projects, managed services like Web Scraping HQ can streamline validation with customizable rules and compliance checks.

Quick Comparison:

| Library | Best For | Example Use Case |
|---|---|---|
| Beautiful Soup | Parsing HTML/XML | Extracting product data |
| Scrapy | Automated pipeline checks | Large-scale scraping |
| Cerberus | Schema-based validation | Enforcing data rules |
| Requests | Verifying web responses | Checking HTTP status |
| Selenium | Handling dynamic content | Scraping JavaScript sites |

Start with a tool like Beautiful Soup for simple tasks, or scale up with Scrapy and Cerberus for more complex needs. Ready to automate? Choose a library and begin cleaning your data today!


Python Libraries for Automating Data Validation

Let's look at the top Python libraries that help you check and clean your data automatically.

Using Beautiful Soup for Parsing and Validation

Beautiful Soup makes it easy to work with messy HTML and XML. Think of it as your data detective - it breaks down web pages into a simple tree structure so you can check things like prices or product descriptions before pulling them out. It's like having a quality control checkpoint for your web data.
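Here's a minimal sketch of that checkpoint idea. It parses a small product fragment and only keeps a product when the expected elements exist and the price text converts to a positive number. The HTML, the class names (`product`, `name`, `price`), and the helper `extract_product` are all made up for illustration; real pages will need their own selectors.

```python
from bs4 import BeautifulSoup

# Hypothetical markup; real pages will use different tags and class names.
HTML = """
<div class="product">
  <h2 class="name">Wireless Headphones</h2>
  <span class="price">$149.99</span>
</div>
<div class="product">
  <h2 class="name">Mystery Item</h2>
  <span class="price">Call for price</span>
</div>
"""


def extract_product(card):
    """Return a dict for one product card, or None if a check fails."""
    name_tag = card.find("h2", class_="name")
    price_tag = card.find("span", class_="price")
    if name_tag is None or price_tag is None:
        return None  # a required element is missing from the page

    raw_price = price_tag.get_text(strip=True).replace("$", "").replace(",", "")
    try:
        price = float(raw_price)
    except ValueError:
        return None  # price text is not numeric
    if price <= 0:
        return None

    return {"name": name_tag.get_text(strip=True), "price": price}


soup = BeautifulSoup(HTML, "html.parser")
cards = soup.find_all("div", class_="product")
products = [p for p in (extract_product(c) for c in cards) if p is not None]
print(products)
```

Only the first card passes the checks; the second is skipped because its price text is not a number.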

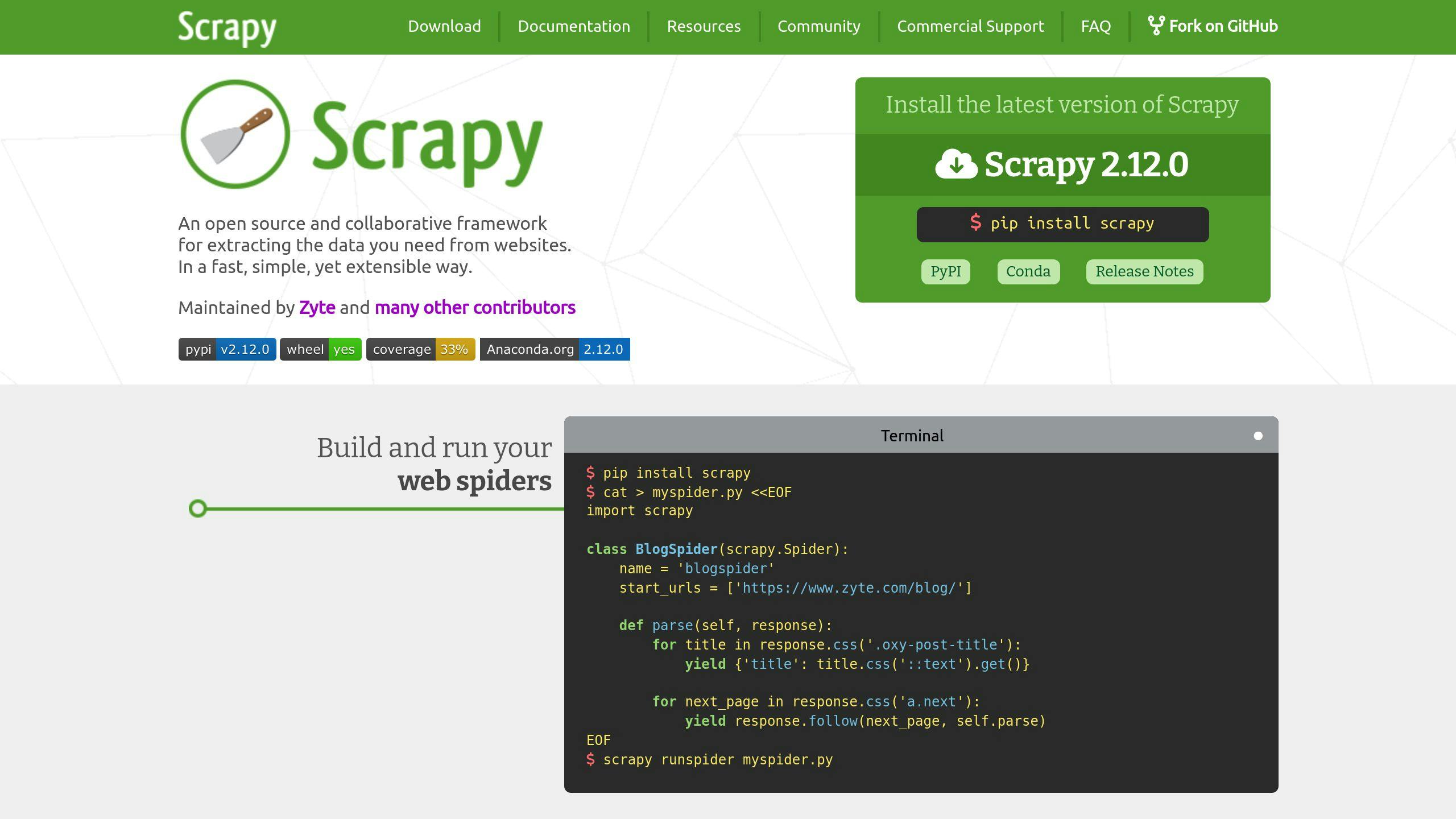

Scrapy's Built-In Validation Tools

Scrapy builds validation into the scraping workflow. It's like having an assembly line for your data - each scraped item passes through item pipelines, where your code cleans it up and checks that everything's correct. Scrapy's Item and Field classes declare which fields you expect; the type checks and any special rules live in Item Loader processors or pipeline code, since Field on its own doesn't enforce types.

Validating Data Models with Cerberus

Cerberus is your data bouncer - it makes sure everything follows the rules you set up. Want to check if numbers fall within certain ranges? Need to verify complex nested data? Cerberus handles it all. It's particularly good at managing data that has lots of interconnected parts.

Other Helpful Libraries: Requests and Selenium

Requests and Selenium round out your data-checking toolkit. Requests makes sure you're getting good responses from websites, while Selenium helps check data on JavaScript-heavy sites. It's like having both a security guard at the door (Requests) and someone inside making sure everything runs smoothly (Selenium).
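For the Requests side, a rough sketch of the "security guard" might look like this. The helper names `check_response` and `fetch_html` are hypothetical, and the three checks (status code, content type, non-empty body) are just one reasonable starting set.

```python
import requests


def check_response(response):
    """Return a list of problems found in an HTTP response (empty if none)."""
    problems = []
    if response.status_code != 200:
        problems.append(f"unexpected status {response.status_code}")
    content_type = response.headers.get("Content-Type", "")
    if "text/html" not in content_type:
        problems.append(f"unexpected content type {content_type!r}")
    if not response.text.strip():
        problems.append("empty body")
    return problems


def fetch_html(url, timeout=10):
    """Fetch a page and return its HTML, or raise ValueError if checks fail."""
    response = requests.get(url, timeout=timeout)
    problems = check_response(response)
    if problems:
        raise ValueError(f"{url}: " + "; ".join(problems))
    return response.text
```

Keeping the checks in a separate function means you can test them without touching the network, and reuse them for responses you fetched some other way.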

| Library | What It Does Best | Perfect For |
|---|---|---|
| Beautiful Soup | Checks HTML/XML structure | Regular websites |
| Scrapy | Handles data pipeline checks | Big data projects |
| Cerberus | Enforces data rules | Complex data structures |
| Requests | Verifies web responses | Basic web scraping |
| Selenium | Checks dynamic content | Modern web apps |

These tools give you everything you need to build solid data-checking systems that fit your specific needs.

Steps to Automate Data Validation

Defining and Enforcing Data Schemas

Think of data schemas as the building blocks of your validation process - they're like a quality control checklist for your data. Here's what a basic schema looks like:

| Data Field | Validation Rules | Example |
|---|---|---|
| Product Name | Required, String, Min Length 3 | "Wireless Headphones" |
| Price | Required, Float, Range 0-10000 | 149.99 |
| SKU | Required, Alphanumeric, Length 8-12 | "PRD12345678" |
| Stock | Integer, Min 0 | 250 |

Using tools like Cerberus, you can set up these rules to catch data issues before they cause problems. What makes Cerberus stand out? It handles nested data structures like a pro - perfect for when your data gets complex. You can even set up conditional rules, such as a field that's only allowed when another field is present or has a particular value, kind of like having a smart filter that knows when to adjust its settings.

Checking Data Types and Formats

Let's talk about keeping your data clean and consistent. Python makes this easier with tools like Beautiful Soup for HTML checking and Scrapy for data processing. These tools help you:

- Turn text prices into actual numbers

- Make sure dates follow the same format

- Check if emails, phone numbers, and URLs are well-formed
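These checks don't need anything beyond the standard library to get started. The sketch below shows one possible version of each; the function names, the accepted date formats, and the deliberately loose email pattern are assumptions you'd adjust for your own data.

```python
import re
from datetime import datetime
from decimal import Decimal, InvalidOperation
from urllib.parse import urlparse

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y")  # assumed input formats


def parse_price(text):
    """Convert '$1,299.00' style text to a Decimal, or None if not numeric."""
    cleaned = re.sub(r"[^\d.\-]", "", text)
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None


def normalize_date(text):
    """Return the date as ISO 'YYYY-MM-DD', or None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def is_valid_email(text):
    """Format check only; it cannot tell whether the mailbox exists."""
    return bool(EMAIL_RE.match(text))


def is_valid_url(text):
    """Accept only http(s) URLs that include a host."""
    parts = urlparse(text)
    return parts.scheme in ("http", "https") and bool(parts.netloc)
```

`Decimal` is used for prices here to avoid floating-point rounding surprises; plain `float` works too if exact cents don't matter to you.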

Validating Data Ranges and Boundaries

Setting boundaries helps you spot weird data before it messes up your system. Scrapy's item pipelines are a good place for this - they check each item as it comes in. Here's what you'll want to watch for:

- Prices (they shouldn't be negative or crazy high)

- Stock levels (can't have -10 items in stock!)

- Percentages (nothing over 100%)

- Dates (no orders from the year 1800)

- Text length (product descriptions shouldn't be novels)
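The list above can be sketched as a single function that reports every boundary a record breaks. The limits and the record keys (`price`, `stock`, `discount_pct`, `order_date`, `description`) are made-up examples, not recommended values.

```python
from datetime import date

# Illustrative limits; tune them to your own catalogue.
MAX_PRICE = 10_000
MAX_DESCRIPTION_CHARS = 2_000
EARLIEST_ORDER_DATE = date(2000, 1, 1)


def range_errors(record, today=None):
    """Return a list of human-readable boundary violations for one record."""
    today = today or date.today()
    errors = []
    if not 0 < record["price"] <= MAX_PRICE:
        errors.append("price out of range")
    if record["stock"] < 0:
        errors.append("stock is negative")
    if not 0 <= record["discount_pct"] <= 100:
        errors.append("discount percentage out of range")
    if not EARLIEST_ORDER_DATE <= record["order_date"] <= today:
        errors.append("order date out of range")
    if len(record["description"]) > MAX_DESCRIPTION_CHARS:
        errors.append("description too long")
    return errors
```

Returning all violations at once, rather than stopping at the first, makes it easier to see which rules fail most often.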


Tips for Effective Data Validation

Using Well-Known Libraries

Let's talk about Python data validation - why reinvent the wheel when battle-tested libraries exist?

Beautiful Soup makes HTML parsing a breeze, pulling out structured data with minimal fuss. Scrapy brings item pipelines and Item Loaders, ready-made hooks for validation in web scraping projects.

Want schema validation without the headache? Cerberus does the heavy lifting with its straightforward dictionary-based approach:

    schema = {
        'product_name': {'type': 'string', 'required': True, 'minlength': 3},
        'price': {'type': 'float', 'required': True, 'min': 0},
        'stock': {'type': 'integer', 'min': 0},
        'last_updated': {'type': 'datetime'}
    }

Handling Errors During Validation

Let's face it - errors happen. What matters is how you deal with them. Set up a solid error management system and use logging to track what's going wrong and why.

Here's what to do with different error types:

| Error Type | Response |
|---|---|
| Missing Fields | Log error, skip record, flag for review |
| Invalid Format | Attempt auto-correction, store original and corrected values |
| Out of Range | Apply boundary limits, cap at max/min allowed values |
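One way to wire up those three responses is sketched below with the standard `logging` module. The required fields, the price limits, and the `handle_record` helper are assumptions for the example; a real project would have its own.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("validation")

REQUIRED = ("name", "price")          # assumed required fields
PRICE_MIN, PRICE_MAX = 0.0, 10_000.0  # assumed limits


def handle_record(record, review_queue):
    """Apply the three responses from the table; return a record or None."""
    # Missing fields: log the error, flag for review, skip the record.
    missing = [f for f in REQUIRED if record.get(f) in (None, "")]
    if missing:
        log.error("missing fields %s in %r", missing, record)
        review_queue.append(record)
        return None

    cleaned = dict(record)

    # Invalid format: try to auto-correct and keep the original value.
    if isinstance(cleaned["price"], str):
        cleaned["price_original"] = cleaned["price"]
        text = cleaned["price"].replace("$", "").replace(",", "")
        try:
            cleaned["price"] = float(text)
        except ValueError:
            log.error("uncorrectable price %r", cleaned["price"])
            review_queue.append(record)
            return None

    # Out of range: cap at the allowed boundary.
    capped = min(max(cleaned["price"], PRICE_MIN), PRICE_MAX)
    if capped != cleaned["price"]:
        log.warning("price %s capped to %s", cleaned["price"], capped)
        cleaned["price"] = capped
    return cleaned
```

Capping silently changes data, so it's worth logging every capped value as shown; for some datasets, rejecting the record may be the safer choice.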

Monitoring and Updating Validation Systems

Your data's always changing - your validation rules should too. Keep an eye on things with these key metrics:

- How many records fail validation

- Which errors pop up most often

- How long it takes to process each record

- How many values get auto-corrected

Set up alerts for when validation failures spike above normal levels. And don't forget to check your validation rules every few months - what worked last quarter might not cut it today.
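A small counter object is enough to start tracking these numbers. The `ValidationStats` class and its alert rule (failure rate above some multiple of your usual rate) are a sketch, not a standard tool; the baseline and tolerance values are yours to pick.

```python
from collections import Counter


class ValidationStats:
    """Collects failure, error-type, timing, and auto-correction metrics."""

    def __init__(self):
        self.total = 0
        self.failed = 0
        self.auto_corrected = 0
        self.error_counts = Counter()
        self.seconds = 0.0

    def record(self, errors, corrected=False, elapsed=0.0):
        """Register one processed record and the errors it produced."""
        self.total += 1
        self.seconds += elapsed
        if errors:
            self.failed += 1
            self.error_counts.update(errors)
        if corrected:
            self.auto_corrected += 1

    @property
    def failure_rate(self):
        return self.failed / self.total if self.total else 0.0

    @property
    def avg_seconds(self):
        return self.seconds / self.total if self.total else 0.0

    def should_alert(self, baseline_rate, tolerance=2.0):
        """True when failures exceed `tolerance` times the usual rate."""
        return self.failure_rate > baseline_rate * tolerance


stats = ValidationStats()
stats.record([], elapsed=0.01)
stats.record(["price out of range"], elapsed=0.02)
stats.record(["price out of range", "stock is negative"], corrected=True)
print(stats.failure_rate, stats.error_counts.most_common(1))
```

In a real run you'd time each record with something like `time.perf_counter()` and send the alert to whatever channel your team already watches.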

Using Managed Validation Services

Not everyone has the time or team to build data validation systems from scratch. That's where managed web scraping services come in - they handle the heavy lifting while you focus on using the data.

What Web Scraping HQ Offers

Web Scraping HQ takes care of your entire data pipeline, from gathering to quality checks. Their platform doesn't just collect data - it makes sure what you get is clean, accurate, and follows the rules.

Here's what their validation system includes:

| Validation Layer | Features |
|---|---|
| Primary Check | Schema validation, industry rules |
| Quality Assurance | Two-step validation, auto error fixes |
| Compliance | Legal checks, privacy standards |
| Output Control | JSON/CSV formatting, custom schemas |

Features of Web Scraping HQ

The platform goes beyond basic checks. Here's what sets it apart:

- Data schemas you can customize for your industry

- Smart rules that adjust to data changes

- Double-check system for better accuracy

- Options to manage your crawls

- Quick help when validation issues pop up

"Managed services combine compliance with data quality, offering businesses reliable validation solutions."

When Managed Services Are a Good Fit

Think of managed services as your data validation team-for-hire. They're perfect if you:

- Don't have data engineers on staff

- Need to scale up quickly

- Must follow strict industry rules

These services work great alongside Python libraries, giving you the best of both worlds - the flexibility of code and the peace of mind of expert support.

Conclusion: Automating Data Validation with Python

Let's break down how to make data validation work for your web scraping projects. Python makes this job easier with tools like Beautiful Soup, Scrapy, and Cerberus.

Think of these tools as your quality control team:

- Beautiful Soup checks if your HTML makes sense

- Cerberus makes sure your data follows the rules

- Scrapy keeps an eye on everything automatically

Here's what each tool does best:

| Tool | What It Does | When to Use It |
|---|---|---|
| Beautiful Soup | Checks HTML structure | When you need basic web page checks |
| Cerberus | Enforces data rules | When you need strict data formats |
| Scrapy | Handles complex checks | When you're working at scale |

Ready to start? Pick one tool and master it. Beautiful Soup is perfect for beginners - it's like training wheels for data validation. As you scrape more data, you'll need better ways to keep it clean and organized.

Don't want to deal with the technical stuff? Web Scraping HQ offers ready-to-use validation systems. They handle the heavy lifting while you focus on what matters: analyzing your data.
