- Harsh Maur

- May 19, 2025

Best Methods to Scrape eBay Listings

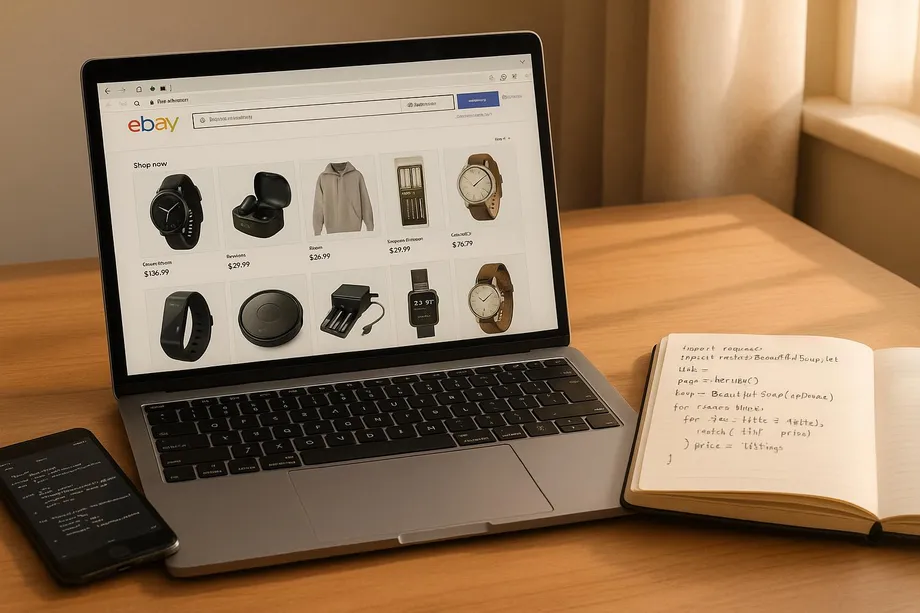

Scraping eBay listings can help businesses analyze market trends, set competitive prices, and track inventory. The best methods include using the eBay API, Python-based solutions, browser automation, or professional services. Each approach has its strengths, depending on your technical skills and project scale.

Key Takeaways:

- eBay API: Safest and most compliant method for structured data.

- Python-based scraping: Flexible, ideal for customization (requires coding skills).

- Browser automation: Great for dynamic content (JavaScript-heavy pages).

- Professional services: Best for large-scale, managed solutions.

Quick Comparison:

| Method | Best For | Key Benefit |
|---|---|---|
| eBay API | Legal Compliance | Reliable, structured data access |
| Python-based Solutions | Customization | Flexible and adaptable |
| Browser Automation | Complex Data | Simulates human behavior |
| Professional Services | Large-scale projects | Managed, scalable solutions |

Legal Tips:

- Follow eBay’s terms of service.

- Avoid scraping private data.

- Use rate limiting and proxies to prevent detection.

Choose the method that fits your needs while staying within legal boundaries. The sections below cover detailed setups and tools such as httpx, BeautifulSoup4, and Selenium.

Setup Requirements

Before diving into scraping eBay listings, it's important to set up the right tools and follow proper guidelines. With eBay generating nearly $74 billion in gross merchandise volume in 2022, the platform offers a wealth of market insights for businesses and researchers alike.

Required Tools

To scrape eBay listings effectively, you'll need a solid toolkit of Python libraries and related resources. Here's a breakdown:

| Tool Category | Essential Components | Purpose |
|---|---|---|
| Core Libraries | Python 3.x, httpx, BeautifulSoup4 | Basic scraping functionality |
| Parsing Tools | parsel, lxml | Processing HTML and extracting data |
| Data Handling | nested-lookup, csv module (ObjectsToCsv is the Node.js counterpart) | Organizing and exporting scraped data |
| Network Management | Proxy services, Request handlers | Managing access and avoiding restrictions |

These tools streamline data collection. For example, BeautifulSoup4 is well suited to navigating intricate HTML structures, and httpx provides a modern HTTP client with HTTP/2 and async support. Note that httpx does not execute JavaScript, so dynamically rendered content still needs browser automation. Once your toolkit is ready, it’s equally important to stay within legal boundaries.

Legal Guidelines

Scraping eBay listings requires careful adherence to legal and ethical standards. To avoid potential issues, review eBay's terms of service and consult legal experts if necessary. Here are some key principles to follow:

- Respect eBay's rate limits to avoid overloading their servers.

- Do not access or scrape private user data.

- Space out requests to maintain reasonable intervals.

- Adhere to eBay's API License Agreement when applicable.

By following these guidelines, you can ensure your scraping activities remain compliant and ethical.

Data Points to Extract

To make your efforts worthwhile, focus on gathering data that provides actionable insights. Research from NBER indicates that analyzing eBay sales and competitor data can increase weekly sales by an average of 3.6%. Here's what to prioritize:

| Category | Key Data Elements | Business Value |
|---|---|---|
| Product Details | Title, SKU, Condition | Helps with inventory analysis |
| Pricing Information | Current price, Previous price, Shipping costs | Aids in competitive pricing strategies |
| Seller Metrics | Feedback score, Response rate | Assesses market reputation |
| Transaction Data | Sales velocity, Best offer status | Provides insights into demand trends |

With the right tools, legal framework, and focus on critical data points, you’ll be well-equipped to extract meaningful insights from eBay listings.
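The data points in the table above could be captured in a small record type before any scraping code is written. The sketch below is one possible layout, not an eBay schema: every field name, and the `total_cost` helper, is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Listing:
    """One scraped listing; field names are illustrative, not an eBay schema."""

    # Product details
    item_id: str
    title: str
    condition: str
    sku: Optional[str] = None
    # Pricing information (USD)
    current_price: float = 0.0
    previous_price: Optional[float] = None
    shipping_cost: float = 0.0
    # Seller metrics
    seller_feedback_score: Optional[int] = None
    # Transaction data
    best_offer_enabled: bool = False

    def total_cost(self) -> float:
        """Item price plus shipping, before tax."""
        return round(self.current_price + self.shipping_cost, 2)


# Made-up sample values, used only to show the shape of a record.
example = Listing(
    item_id="1234567890",
    title="Vintage film camera",
    condition="Used",
    current_price=89.99,
    shipping_cost=7.5,
    best_offer_enabled=True,
)
print(example.total_cost())
```

Keeping price and shipping as separate numeric fields makes later comparisons between sellers easier than storing a single display string.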

Scraping Techniques

If you're looking to scrape eBay listings effectively, here are some proven methods to get the job done.

Python-Based Scraping

Python is a go-to tool for scraping, thanks to its powerful libraries like httpx and parsel. Here's how you can set up a Python-based scraper:

| Component | Configuration | Purpose |
|---|---|---|
| HTTP Client | httpx[http2] with browser headers | Manages requests and handles redirects |
| Parser | parsel or BeautifulSoup4 | Extracts data from HTML content |
| Data Handler | nested_lookup | Handles complex data extraction requirements |
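To illustrate the parser row, here is a minimal sketch of pulling titles and prices out of listing markup. To stay dependency-free it swaps in Python's standard-library `html.parser` rather than parsel or BeautifulSoup4, which would express the same extraction more compactly with CSS selectors. The sample HTML and the class names `s-item__title` and `s-item__price` are assumptions; check them against the live page before relying on them.

```python
from html.parser import HTMLParser

# Hypothetical markup: these class names are assumptions for illustration.
SAMPLE_HTML = """
<ul>
  <li class="s-item"><span class="s-item__title">Vintage film camera</span>
      <span class="s-item__price">$89.99</span></li>
  <li class="s-item"><span class="s-item__title">Camera strap</span>
      <span class="s-item__price">$12.50</span></li>
</ul>
"""


class ListingParser(HTMLParser):
    """Collects title/price pairs from spans with the assumed class names."""

    FIELDS = {"s-item__title": "title", "s-item__price": "price"}

    def __init__(self):
        super().__init__()
        self.listings = []   # completed rows
        self._current = {}   # row being assembled
        self._field = None   # field whose text we are currently inside

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for cls in classes:
            if cls in self.FIELDS:
                self._field = self.FIELDS[cls]

    def handle_data(self, data):
        if self._field and data.strip():
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        # A row is complete once every expected field has been seen.
        if len(self._current) == len(self.FIELDS):
            self.listings.append(self._current)
            self._current = {}


parser = ListingParser()
parser.feed(SAMPLE_HTML)
print(parser.listings)
```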

To make your scraping more efficient, focus on using eBay's URL parameters. For example:

- `_nkw`: Targets specific keywords.

- `_sacat`: Filters by category.

- `_pgn`: Handles pagination for multiple pages.
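The three parameters above can be combined into a search URL with the standard library. This is a minimal sketch: the base path `https://www.ebay.com/sch/i.html` and the category ID `625` are assumptions for illustration, so confirm both against a real search in your browser.

```python
from urllib.parse import urlencode

# Assumed search endpoint; verify the path before use.
SEARCH_URL = "https://www.ebay.com/sch/i.html"


def build_search_url(keywords, category_id=None, page=1):
    """Return a search URL using the _nkw, _sacat, and _pgn query parameters."""
    params = {"_nkw": keywords, "_pgn": page}
    if category_id is not None:
        params["_sacat"] = category_id
    return f"{SEARCH_URL}?{urlencode(params)}"


# Three result pages for one keyword; 625 is a placeholder category ID.
urls = [build_search_url("film camera", category_id=625, page=p) for p in range(1, 4)]
for url in urls:
    print(url)
```

Building URLs with `urlencode` instead of string concatenation ensures that spaces and special characters in keywords are escaped correctly.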

If you encounter content heavily reliant on JavaScript, you'll need to shift to browser automation for better results.

Browser Automation

When dealing with dynamic content and JavaScript-heavy pages, browser automation is your best bet. Here’s how to approach it:

- Selenium Stealth Implementation: Adjust browser settings to mimic real user interactions, helping you avoid detection.

- Proxy Integration: Use IP rotation with randomized intervals to maintain access and prevent blocks.

- Behavioral Patterns: Simulate natural user behavior by incorporating random scrolling, pauses, and varied interaction speeds.

These strategies require careful configuration and must follow ethical and legal guidelines to ensure compliance with eBay's terms of service. Browser automation is especially useful for mimicking human actions on complex pages.

Professional Data Services

When technical solutions fall short, professional scraping services can step in to handle large-scale or highly specific data extraction needs. For example, Web Scraping HQ offers tailored eBay data extraction with features like:

| Feature | Benefit | Implementation |
|---|---|---|
| Automated QA | Ensures data accuracy and completeness | Double-layer quality verification |
| Custom Schema | Matches your business needs | Tailored data structure |
| Legal Compliance | Adheres to eBay’s terms | Built-in monitoring |
| Scalable Infrastructure | Handles high-volume data requests | Enterprise-grade architecture |

Their Standard plan ($449/month) delivers structured data in JSON or CSV formats. For more advanced needs, the Custom plan (starting at $999/month) includes self-managed crawling and priority support.

With billions of listings available on eBay, choosing the right scraping approach depends on your technical expertise and project goals. Whether you prefer coding with Python, automating with browser tools, or relying on professional services, there’s a method to match your needs.

Data Management

Data Quality Checks

Accurate data is the backbone of any meaningful analysis, especially when dealing with scraped eBay listings. To ensure reliability, a thorough validation framework is essential. Here's a breakdown of key validation types:

| Validation Type | Implementation | Purpose |
|---|---|---|
| Format Checks | Currency (e.g., $XX.XX), Dates (MM/DD/YYYY) | Ensures compliance with U.S. formatting standards |
| Range Validation | Price limits, listing dates | Identifies outliers and errors |
| Completeness | Presence of required fields | Upholds data integrity |
| Deduplication | Checking unique listing IDs | Avoids duplicate entries |

For price validation, consider these factors:

- Base price in USD

- Shipping costs

- Tax calculations

- "Best Offer" options

- Multi-quantity listings

Once the data passes these quality checks, the next step is selecting the right storage solution to manage it effectively.

Storage Solutions

Validated data needs to be stored in a way that supports long-term analysis and scalability. The storage format you choose should align with both the volume of data and how you plan to use it. Here's a quick guide:

| Storage Type | Best For |
|---|---|
| CSV Files | Small datasets and simple exports |
| JSON Format | Complex, hierarchical data |
| SQL Database | Structured queries and scalable storage |
| Cloud Storage | Large-scale, enterprise-level operations |

When implementing storage solutions, keep these points in mind:

- Automated Pipelines: Use checkpointing to capture, validate, and standardize data at every stage.

- Error Tracking: Log validation errors, storage issues, and failures for better troubleshooting.
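One simple way to combine checkpointing with error tracking is to record the last successfully processed page in a small JSON file and log any failure before stopping. The sketch below assumes page-by-page processing; the file name, the `run` helper, and the simulated failure are all hypothetical.

```python
import json
import logging
import tempfile
from pathlib import Path

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ebay_pipeline")


def load_checkpoint(path):
    """Return the last completed page number, or 0 if no checkpoint exists."""
    if path.exists():
        return json.loads(path.read_text())["last_page"]
    return 0


def save_checkpoint(path, page):
    path.write_text(json.dumps({"last_page": page}))


def run(pages, process_page, checkpoint_path):
    """Process pages in order, skipping completed ones; log and stop on failure."""
    start = load_checkpoint(checkpoint_path)
    for page in pages:
        if page <= start:
            continue
        try:
            process_page(page)
        except Exception:
            log.exception("page %d failed; the next run resumes here", page)
            break
        save_checkpoint(checkpoint_path, page)


# Demo: a stand-in page handler that fails once on page 3.
done = []
failed_once = set()


def fake_process(page):
    if page == 3 and page not in failed_once:
        failed_once.add(page)
        raise RuntimeError("simulated network error")
    done.append(page)


ckpt = Path(tempfile.mkdtemp()) / "checkpoint.json"
run(range(1, 6), fake_process, ckpt)  # stops at page 3
run(range(1, 6), fake_process, ckpt)  # resumes from page 3
print(done)
```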

To streamline queries and simplify upkeep, consider segmenting your data by category, update frequency, or region. This approach not only boosts efficiency but also makes maintenance far easier in the long run.
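As a rough illustration of the SQL option with category segmentation, the sketch below uses Python's built-in `sqlite3` module. The table layout and column names are assumptions, and the upsert syntax requires SQLite 3.24 or newer. A primary key on the listing ID prevents duplicates, and an index on `category` keeps per-category queries fast.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # pass a file path for persistent storage
conn.execute(
    """
    CREATE TABLE listings (
        item_id    TEXT PRIMARY KEY,   -- unique listing ID prevents duplicates
        title      TEXT NOT NULL,
        category   TEXT NOT NULL,
        price_usd  REAL NOT NULL,
        scraped_at TEXT NOT NULL
    )
    """
)
conn.execute("CREATE INDEX idx_listings_category ON listings (category)")


def upsert(conn, row):
    """Insert a listing, or refresh price and timestamp if the ID already exists."""
    conn.execute(
        """
        INSERT INTO listings (item_id, title, category, price_usd, scraped_at)
        VALUES (:item_id, :title, :category, :price_usd, :scraped_at)
        ON CONFLICT(item_id) DO UPDATE SET
            price_usd = excluded.price_usd,
            scraped_at = excluded.scraped_at
        """,
        row,
    )


# Made-up rows; the third one re-scrapes listing "1" at a new price.
rows = [
    {"item_id": "1", "title": "Vintage film camera", "category": "Cameras",
     "price_usd": 89.99, "scraped_at": "2025-05-19"},
    {"item_id": "2", "title": "Camera strap", "category": "Accessories",
     "price_usd": 12.50, "scraped_at": "2025-05-19"},
    {"item_id": "1", "title": "Vintage film camera", "category": "Cameras",
     "price_usd": 84.99, "scraped_at": "2025-05-20"},
]
with conn:  # commits on success, rolls back on error
    for row in rows:
        upsert(conn, row)

cameras = conn.execute(
    "SELECT item_id, price_usd FROM listings WHERE category = ?", ("Cameras",)
).fetchall()
print(cameras)
```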


Legal Compliance

When scraping eBay listings, staying within legal boundaries is just as important as the technical aspects. Adhering to eBay's terms of service is crucial to avoid penalties such as account suspension, bans, or even legal consequences.

Access Management

Responsible access management is key to ensuring your data collection doesn't disrupt eBay's servers. Here are some best practices to follow:

- Implement rate limiting to control the number of requests sent within a specific timeframe.

- Rotate IP addresses or use proxies to simulate natural browsing patterns.

- Use realistic, browser-like request headers to avoid detection.

- Optimize session durations to prevent excessive server load.

These practices not only help safeguard eBay's infrastructure but also contribute to a smoother and more predictable data collection process. While accessing publicly available data isn't inherently illegal, court cases such as eBay v. Bidder's Edge (2000) show that aggressive scraping, especially if it impacts server performance, can lead to enforcement actions.
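Rate limiting can be as simple as enforcing a minimum gap between requests, optionally with some random jitter. The following is a minimal sketch, not a production limiter: the `RateLimiter` class and its parameters are hypothetical, and the interval values are placeholders rather than limits published by eBay.

```python
import random
import time


class RateLimiter:
    """Enforces a minimum gap between calls, plus optional random jitter."""

    def __init__(self, min_interval, jitter=0.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self.jitter = jitter              # extra random delay, 0..jitter seconds
        self._clock = clock               # injectable for testing
        self._sleep = sleep
        self._last = None                 # time of the previous call, if any

    def wait(self):
        """Block until the required gap since the previous call has elapsed."""
        now = self._clock()
        if self._last is not None:
            gap = self.min_interval + random.uniform(0, self.jitter)
            remaining = self._last + gap - now
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
        return now


# Demo with short intervals; real crawls would use gaps of seconds, not milliseconds.
limiter = RateLimiter(min_interval=0.05, jitter=0.02)
for page in range(3):
    limiter.wait()
    print("would fetch page", page)
```

Call `limiter.wait()` immediately before each request so the gap applies no matter how long parsing takes in between.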

API vs. Scraping Options

When it comes to legally extracting data, eBay's official API is the recommended route. It provides structured access to data, operates within clear rate limits, and aligns with eBay's policies.

Here's a quick comparison of using the eBay API versus web scraping:

| Feature | eBay API | Web Scraping |
|---|---|---|
| Legal Status | Fully supported and compliant with policies | Violates eBay's terms of service |
| Rate Limits | Defined: 25–500 feeds per 24 hours, depending on feed type | Unpredictable; risks detection |
| Data Quality | Standardized, reliable format | Inconsistent and less reliable |
| File Size Limits | 15 MB maximum for XML data | No specific size limits |

The API is designed to offer specific data feeds with clear rate limits. For instance, the ActiveInventoryReport feed allows 25 requests per day, while the AddFixedPriceItem feed permits up to 500 requests daily. These structured limits ensure stable platform performance and reliable data access.
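For reading listing data, an API call might look like the sketch below. The article does not name a specific endpoint, so this assumes eBay's Browse API search endpoint (`item_summary/search`) with an OAuth bearer token; treat the URL, the parameters, and the marketplace header as assumptions to verify against eBay's developer documentation. The request is only constructed here, not sent.

```python
import urllib.request
from urllib.parse import urlencode

# Assumed endpoint: eBay Browse API keyword search.
BROWSE_SEARCH = "https://api.ebay.com/buy/browse/v1/item_summary/search"


def build_search_request(query, token, limit=50, offset=0, marketplace="EBAY_US"):
    """Build (but do not send) an authenticated search request."""
    params = urlencode({"q": query, "limit": limit, "offset": offset})
    return urllib.request.Request(
        f"{BROWSE_SEARCH}?{params}",
        headers={
            "Authorization": f"Bearer {token}",      # OAuth application token
            "X-EBAY-C-MARKETPLACE-ID": marketplace,  # which eBay site to search
        },
    )


req = build_search_request("film camera", token="YOUR_OAUTH_TOKEN", limit=10)
print(req.full_url)
# To send it once you have a real token:
# with urllib.request.urlopen(req) as resp:
#     data = resp.read()
```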

When deciding between the API and scraping, keep these factors in mind:

- Data Needs: The API provides consistent, structured data tailored for inventory and transaction management, ensuring accuracy and reliability.

- Scalability: API usage is governed by clear limits, like 300 daily requests for OrderReport feeds or 400 for ReviseInventoryStatus feeds, making it a dependable option for scaling.

- Legal Assurance: Using the API keeps you within eBay's policies, which lowers legal risk. In the 2024 Meta v. Bright Data ruling, a U.S. federal court sided with Bright Data over scraping public data while logged out, which underscores that the risk is highest when accessing login-protected data.
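Because these limits are per feed type and per day, a client can track its own usage and refuse calls that would exceed the quota. The sketch below uses the daily figures quoted in this article; the `DailyQuota` class is hypothetical, and the numbers should be checked against eBay's current documentation before use.

```python
from collections import Counter
from datetime import date

# Daily limits as quoted in this article; verify before relying on them.
DAILY_LIMITS = {
    "ActiveInventoryReport": 25,
    "OrderReport": 300,
    "ReviseInventoryStatus": 400,
    "AddFixedPriceItem": 500,
}


class DailyQuota:
    """Counts calls per feed type and refuses calls beyond the daily limit."""

    def __init__(self, limits, today=date.today):
        self._limits = limits
        self._today = today     # injectable for testing
        self._day = today()
        self._used = Counter()

    def _roll_over(self):
        """Reset all counters when the calendar day changes."""
        current = self._today()
        if current != self._day:
            self._day = current
            self._used.clear()

    def try_acquire(self, feed_type):
        """Count the call and return True if quota remains, else return False."""
        self._roll_over()
        if self._used[feed_type] >= self._limits[feed_type]:
            return False
        self._used[feed_type] += 1
        return True

    def remaining(self, feed_type):
        self._roll_over()
        return self._limits[feed_type] - self._used[feed_type]


quota = DailyQuota(DAILY_LIMITS)
results = [quota.try_acquire("ActiveInventoryReport") for _ in range(26)]
print(sum(results), "calls allowed;", quota.remaining("OrderReport"), "OrderReport calls left")
```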

Summary

Scraping eBay listings involves finding the right balance between technical know-how and staying within legal boundaries. Besides the official eBay API, the three main scraping methods - Python-based scraping, browser automation tools, and professional data services - each cater to different needs and project sizes.

Python-based scraping is a budget-friendly option for smaller projects. However, it demands coding skills and careful execution to avoid detection. Browser automation tools like Puppeteer and BrowserQL offer more advanced solutions, making them ideal for handling dynamic content and navigating anti-bot measures.

For large-scale data extraction, professional data services stand out as the most dependable choice. These services tackle complex tasks like proxy rotation and CAPTCHA solving, while delivering clean, structured data in formats like JSON or CSV.

To ensure successful eBay scraping, it's essential to focus on quality control, efficient storage, and legal compliance. A solid data governance framework can help maintain the accuracy and reliability of your data while supporting your goals.

Here are some key practices for sustainable scraping:

- Use rate limiting and proxy rotation to avoid detection

- Organize data with structured storage systems for easy access

- Regularly validate data to maintain quality

- Adhere to eBay's terms of service and legal requirements

- Continuously update and refine scraping methods
