- Harsh Maur

- October 18, 2025

- WebScraping

How to Scrape Craigslist Phone Numbers?

Scraping phone numbers from Craigslist can be a technical challenge due to its anti-bot protections, but it’s possible with the right tools and a clear understanding of legal boundaries. Here’s a quick summary:

- Legal Considerations: Craigslist prohibits automated data collection in its Terms of Service. Scraping phone numbers is also subject to U.S. privacy laws. Always review these rules and consult legal experts if needed.

- Tools Needed: Python libraries like Requests, BeautifulSoup, Selenium, and Playwright help extract data from Craigslist listings. For images, Tesseract OCR can identify text-based phone numbers.

- Steps to Scrape:

  - Collect listing URLs using Python scripts.

  - Download and parse HTML content using libraries like BeautifulSoup or Playwright.

  - Extract phone numbers with regex for text and OCR for images.

- Anti-Bot Measures: Craigslist uses rate limits, CAPTCHAs, and IP tracking. Techniques like rotating proxies, switching user agents, and adding delays between requests can help avoid detection.

- Managed Services: For large-scale projects, services like Web Scraping HQ offer ready-to-use solutions with compliance and quality checks.

Scraping requires technical expertise and ethical considerations. Always ensure compliance with Craigslist’s policies and privacy laws to avoid legal risks.

Legal and Ethical Rules for Craigslist Scraping

If you're considering scraping phone numbers from Craigslist, it's crucial to understand the legal and ethical boundaries first. Craigslist's terms explicitly limit automated data collection, and ignoring these rules can result in serious legal issues. Make sure to review their guidelines carefully - they lay the groundwork for any technical steps you might take later.

Craigslist Terms of Service and U.S. Privacy Laws

Start by thoroughly reading Craigslist's terms of service to identify any restrictions on automated tools or data scraping. Since phone numbers are considered personal information, their collection and use are also governed by U.S. privacy laws. If you're unclear about your legal responsibilities, it's wise to consult a legal expert to avoid potential pitfalls.

Responsible Use of Scraped Phone Numbers

If you decide to use scraped phone numbers, do so with care and integrity. Only use the data for legitimate purposes, ensure you have the necessary consent, and implement strong security measures to protect the information. Taking these steps will help you comply with legal standards and uphold ethical practices in managing the data you collect.

Setting Up Your Scraping Tools

Before diving into scraping, you’ll need to prepare your environment by installing Python, setting up a workspace, and configuring the necessary libraries.

Required Python Libraries and Tools

For this project, you’ll rely on Python libraries for tasks like making HTTP requests, parsing HTML, and automating browsers. Here’s a breakdown of the key tools:

- Requests: Handles HTTP requests to fetch webpage content from Craigslist.

- BeautifulSoup: Works alongside Requests to parse HTML, making it easier to navigate and extract specific elements from listings.

- lxml: Speeds up parsing and supports XPath expressions for precise element selection, making it ideal for handling large datasets.

- Selenium: Automates browser interactions, useful for scraping dynamic JavaScript-based content.

- Playwright: A newer browser automation library that is often faster than Selenium and waits for elements automatically before acting on them.

- Scrapy: A complete framework for managing workflows, handling multiple pages, and organizing data pipelines.

Here’s a quick comparison of these libraries:

| Library | Purpose | Ease of Use | Speed | JavaScript Support |
|---|---|---|---|---|
| Requests | HTTP requests | High | Fast | None |
| BeautifulSoup | HTML parsing | High | Fast | None |
| lxml | Fast parsing | Medium | Very fast | None |
| Selenium | Browser automation | Medium | Slow | Yes |
| Scrapy | Complete framework | High | Very fast | None |

For exporting data, libraries like pandas, pyarrow, and openpyxl are great for organizing and saving results into CSV or Excel files. If you’re using Selenium, webdriver-manager simplifies browser driver installation, saving you time and effort.
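As a dependency-free alternative to the pandas route, the sketch below writes results with Python's built-in csv module. The column names (url, title, phone) and the sample row are assumptions made for illustration, not a format the article prescribes.

```python
import csv
import io


def rows_to_csv(rows, fieldnames=("url", "title", "phone")):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    # extrasaction="ignore" drops keys that are not listed in fieldnames
    writer = csv.DictWriter(buffer, fieldnames=list(fieldnames), extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical sample row; real rows would come from the scraper
sample = [{"url": "https://example.org/1.html",
           "title": "Bike, barely used",
           "phone": "(555) 123-4567"}]
print(rows_to_csv(sample))
```

The csv module quotes the title automatically because it contains a comma. To write a file instead, pass an open file object to csv.DictWriter in place of the StringIO buffer.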

Creating a Python Environment and Installing Dependencies

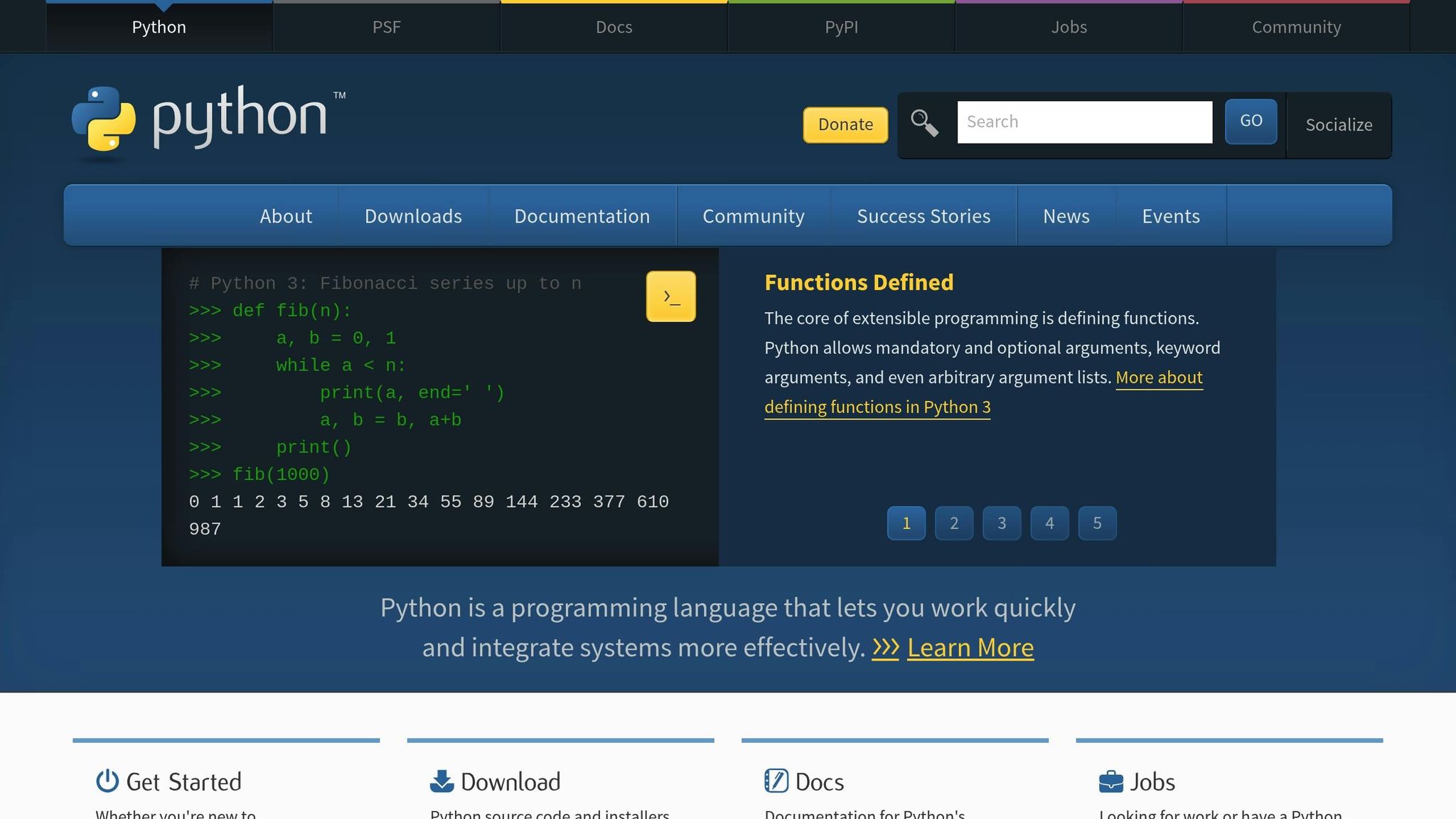

First, ensure Python 3.x is installed. You can download it from python.org/downloads. If you’re on Windows, don’t forget to check the “Add Python to PATH” option during installation. Alternatively, Anaconda offers an all-in-one solution with Python and pre-installed libraries for scientific computing.

Next, create a dedicated folder for your project - let’s call it craigslist-scraper. Open this folder in a code editor like Visual Studio Code or PyCharm, both of which provide excellent tools for Python development and debugging.

To keep your project’s dependencies organized, set up a virtual environment. Here’s how:

```bash
python3 -m venv myenv
```

Activate the virtual environment with one of the following commands:

- Windows: `.\myenv\Scripts\activate`

- Mac/Linux: `source myenv/bin/activate`

In Visual Studio Code, you can link your virtual environment by pressing Ctrl+Shift+P, typing “Python: Select Interpreter,” and selecting the appropriate option.

Once your virtual environment is active, install the required libraries using pip:

- For static pages: `pip install requests beautifulsoup4`

- For JavaScript-heavy pages: `pip install selenium`

- For faster HTML parsing: `pip install lxml`

- For exporting results: `pip install pandas pyarrow openpyxl`

If you're using Selenium 4.6 or later, the bundled Selenium Manager downloads a matching browser driver automatically, so no extra package is needed. On older versions, webdriver-manager fills the same role:

```bash
pip install webdriver-manager
```

For Playwright, install the package and configure browser binaries with:

```bash
pip install playwright
playwright install
```

With these tools and libraries in place, your environment is ready to handle Craigslist’s dynamic content and anti-scraping measures, setting the stage for extracting phone numbers effectively.
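Before moving on, it can help to confirm that the installs actually landed in the active virtual environment. The sketch below is one way to do that using only the standard library; the REQUIRED list is an assumption based on the packages named above, and it uses import names, which sometimes differ from pip names (beautifulsoup4 is imported as bs4).

```python
import importlib.util


def missing_packages(module_names):
    """Return the names from module_names that cannot be imported."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]


# Import names, not pip names (beautifulsoup4 -> bs4)
REQUIRED = ["requests", "bs4", "lxml", "selenium", "playwright", "pandas"]
print("Missing:", missing_packages(REQUIRED) or "none")
```

Anything listed as missing can be installed with the matching pip command from the list above.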

How to Extract Phone Numbers from Craigslist

Extracting phone numbers from Craigslist involves three main steps: identifying listings with phone numbers, downloading the page content, and using pattern matching and OCR to extract the numbers. Here’s how you can tackle each phase effectively.

Finding Listings with Phone Numbers

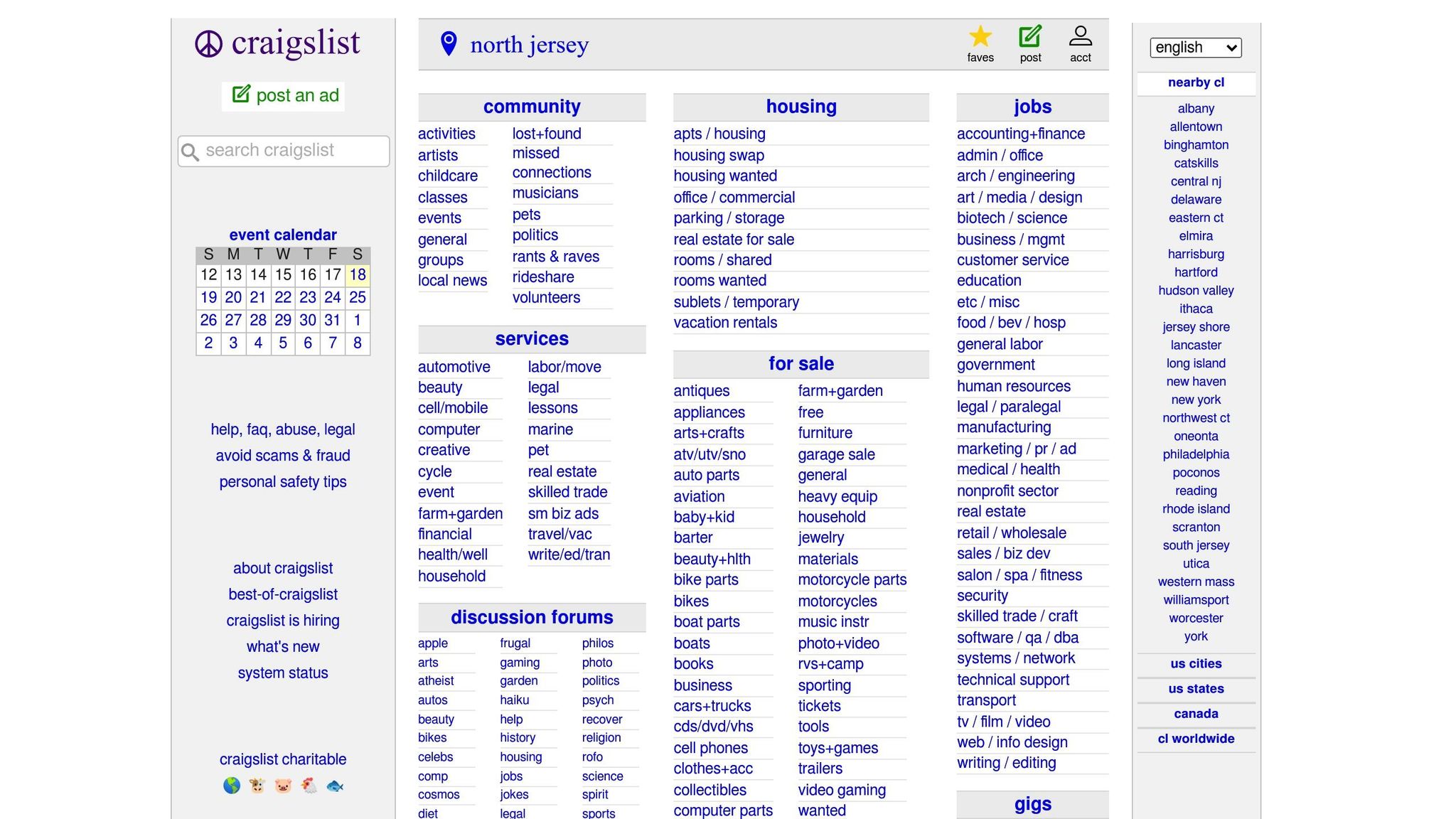

Sections like Services, For Sale, and Housing on Craigslist often include phone numbers since sellers and service providers want direct contact with potential customers.

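Start by narrowing down your target area and category. Craigslist URLs follow a predictable structure built from a city subdomain, a category code, and a listing ID: https://[city].craigslist.org/[category]/[listing-id].html. For example, cto is the category code for cars and trucks by owner, so Los Angeles listings in that category use https://losangeles.craigslist.org/cto/. Live listing URLs may carry extra path segments, such as a sub-area or a title slug, so treat the pattern as a template rather than an exact match.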
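To make the URL pattern concrete, here is a small sketch that builds a category URL from its two parts and splits it back again. The helper names build_category_url and split_category_url are hypothetical, and the code assumes the simple [city]/[category] layout described above.

```python
from urllib.parse import urlsplit


def build_category_url(city, category):
    """Return the category page URL for a city subdomain and category code."""
    return f"https://{city}.craigslist.org/{category}/"


def split_category_url(url):
    """Recover (city, category) from a URL produced by build_category_url."""
    parts = urlsplit(url)
    city = parts.hostname.split(".")[0]             # first label of the host
    category = parts.path.strip("/").split("/")[0]  # first path segment
    return city, category


url = build_category_url("losangeles", "cto")
print(url)                      # https://losangeles.craigslist.org/cto/
print(split_category_url(url))  # ('losangeles', 'cto')
```

Keeping city and category as separate values makes it easy to loop over several regions or categories later.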

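To gather listing URLs systematically, begin with category pages displaying multiple listings. These pages contain links to individual posts, which you can extract using CSS selectors. Most listing links use the .result-title class and include the full URL. Craigslist changes its markup from time to time, so confirm the selector in your browser's developer tools before relying on it.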

Here’s an example script to collect URLs:

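```python
import time

import requests
from bs4 import BeautifulSoup


def get_listing_urls(category_url, max_pages=5):
    """Collect listing URLs from the first max_pages pages of a category."""
    listing_urls = []
    for offset in range(0, max_pages * 120, 120):  # Each page shows 120 listings
        page_url = f"{category_url}?s={offset}"
        response = requests.get(page_url, timeout=10)
        response.raise_for_status()  # Stop on 4xx/5xx instead of parsing an error page
        soup = BeautifulSoup(response.content, 'html.parser')

        # Extract listing URLs
        links = soup.find_all('a', class_='result-title')
        if not links:  # No results: we ran past the last page
            break
        for link in links:
            href = link.get('href')
            if href:
                listing_urls.append(href)

        time.sleep(2)  # Avoid overwhelming Craigslist servers
    return listing_urls
```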

Focus on recent posts (within the last 7 days) for better chances of finding active phone numbers.
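The 7-day cutoff can be applied with a small date check. The sketch below assumes you have already pulled a posting timestamp out of each listing as an ISO 8601 string with a UTC offset (for instance from a time element's datetime attribute, which is an assumption about the page markup); is_recent is a hypothetical helper name.

```python
from datetime import datetime, timedelta, timezone


def is_recent(posted_at, now, max_age_days=7):
    """True if posted_at (ISO 8601 with UTC offset) is within max_age_days of now."""
    posted = datetime.strptime(posted_at, "%Y-%m-%dT%H:%M:%S%z")
    age = now - posted
    # Reject future timestamps as well as posts older than the cutoff
    return timedelta(0) <= age <= timedelta(days=max_age_days)


now = datetime(2025, 10, 18, 12, 0, tzinfo=timezone.utc)
print(is_recent("2025-10-15T09:30:00-0700", now))  # True
print(is_recent("2025-10-01T09:30:00-0700", now))  # False
```

Passing now in explicitly keeps the check deterministic; in a real run you would pass datetime.now(timezone.utc).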

Getting and Reading HTML Content

Once you have the URLs, the next step is downloading and parsing the HTML content. Craigslist pages are usually static HTML, but some phone numbers may be dynamically loaded.

For basic HTML extraction, the combination of requests and BeautifulSoup works well:

```python
import requests
from bs4 import BeautifulSoup


def scrape_listing_content(url):
    """Fetch one listing and return its title, body text, and body HTML."""
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'
    }
    try:
        response = requests.get(url, headers=headers, timeout=10)
        response.raise_for_status()
    except requests.RequestException as e:
        print(f"Error fetching {url}: {e}")
        return None

    soup = BeautifulSoup(response.content, 'html.parser')
    content_div = soup.find('section', {'id': 'postingbody'})
    title = soup.find('span', {'id': 'titletextonly'})
    return {
        'url': url,
        'title': title.text.strip() if title else '',
        'content': content_div.get_text() if content_div else '',
        'html': str(content_div) if content_div else ''
    }
```

For listings with JavaScript-rendered content, Playwright is a better choice:

```python
from playwright.sync_api import sync_playwright

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'


def scrape_with_playwright(url):
    """Render a listing in headless Chromium and return its title and body text."""
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            # Set the user agent on the page's context rather than as an extra header
            page = browser.new_page(user_agent=USER_AGENT)
            page.goto(url)
            page.wait_for_load_state('networkidle')
            content = page.locator('#postingbody').inner_text()
            title = page.locator('#titletextonly').inner_text()
        finally:
            browser.close()  # Release the browser even if a step above fails
    return {
        'url': url,
        'title': title,
        'content': content
    }
```

Always include delays between requests (2-3 seconds) to avoid being flagged by Craigslist. Once you’ve retrieved the HTML content, you’re ready to extract phone numbers.
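One way to apply the 2-3 second delay consistently is to wrap the fetch function in a small loop, as in the sketch below. crawl_politely is a hypothetical helper; fetch can be any function that takes a URL, and the sleep parameter exists only so the pause can be swapped out when testing.

```python
import random
import time


def crawl_politely(urls, fetch, min_delay=2.0, max_delay=3.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:  # No need to wait before the first request
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results
```

For example, crawl_politely(listing_urls, scrape_listing_content) would fetch every collected listing with a randomized pause between requests.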

Extracting Phone Numbers from Text and Images

Once you have the page content, use regex to locate phone numbers in the text. Craigslist phone numbers often appear in formats like (555) 123-4567, 555-123-4567, or 555.123.4567. Here’s a script for extracting them:

```python
import re

PHONE_PATTERN = re.compile(r'''
    (?<!\d)                      # Not preceded by another digit
    (?:\+?1[-.\s]?)?             # Optional country code
    (?:\(?([0-9]{3})\)?[-.\s]?)  # Area code
    ([0-9]{3})[-.\s]?            # First 3 digits
    ([0-9]{4})                   # Last 4 digits
    (?!\d)                       # Not followed by another digit
''', re.VERBOSE)


def extract_phone_numbers(text):
    """Return unique US phone numbers in text, formatted as (XXX) XXX-XXXX."""
    cleaned_phones = []
    for match in PHONE_PATTERN.findall(text):
        full_number = ''.join(match)
        if len(full_number) == 10:  # Validate US numbers
            formatted = f"({full_number[:3]}) {full_number[3:6]}-{full_number[6:]}"
            cleaned_phones.append(formatted)
    return list(dict.fromkeys(cleaned_phones))  # Remove duplicates, keep order
```
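It is worth checking a phone regex against both the formats you expect and the digit runs you want it to ignore. The sketch below uses a compact, self-contained variant of the pattern with a leading digit guard added; find_phones and the sample sentence are made up for illustration.

```python
import re

# Compact variant of the pattern above, with a guard against a preceding digit
PHONE_RE = re.compile(
    r"(?<!\d)(?:\+?1[-.\s]?)?\(?(\d{3})\)?[-.\s]?(\d{3})[-.\s]?(\d{4})(?!\d)"
)


def find_phones(text):
    """Return unique 10-digit strings in order of first appearance."""
    seen = {}
    for area, prefix, line in PHONE_RE.findall(text):
        seen.setdefault(area + prefix + line, None)
    return list(seen)


sample = ("Call (555) 123-4567 or 555.123.4567, "
          "text +1 555-987-6543. Post ID 76543210987.")
print(find_phones(sample))  # ['5551234567', '5559876543']
```

The two spellings of the first number collapse into one entry, and the 11-digit post ID is skipped because the pattern refuses to match inside a longer run of digits.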

Some phone numbers are posted as images to prevent automated scraping. Use Tesseract OCR to extract text from images:

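```python
from io import BytesIO
from urllib.parse import urljoin

import pytesseract
import requests
from PIL import Image


def extract_phone_from_images(soup, base_url=''):
    """OCR every <img> in soup and return any phone numbers found."""
    phone_numbers = []
    for img in soup.find_all('img'):
        img_url = img.get('src')
        if not img_url:
            continue
        try:
            # Resolve relative src values against the listing URL, if given
            response = requests.get(urljoin(base_url, img_url), timeout=10)
            response.raise_for_status()
            image = Image.open(BytesIO(response.content))
            extracted_text = pytesseract.image_to_string(image)
            phone_numbers.extend(extract_phone_numbers(extracted_text))
        except Exception as e:  # Skip unreadable images but keep going
            print(f"Skipping image {img_url}: {e}")
    return phone_numbers
```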

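pytesseract is only a Python wrapper, so install it together with Pillow (`pip install pytesseract pillow`) and then install the Tesseract engine itself:

- Windows: Download from GitHub and add it to your PATH.

- Mac: Use `brew install tesseract`.

- Ubuntu/Debian: Run `sudo apt-get install tesseract-ocr`.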

Finally, combine numbers from text and images:

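```python
from bs4 import BeautifulSoup


def get_all_phone_numbers(listing_data):
    """Merge phone numbers found in a listing's text and images."""
    text_phones = extract_phone_numbers(listing_data['content'])
    # Playwright results carry no 'html' key, so fall back to an empty document
    soup = BeautifulSoup(listing_data.get('html', ''), 'html.parser')
    image_phones = extract_phone_from_images(soup)
    all_phones = text_phones + image_phones
    return list(set(all_phones))  # Remove duplicates
```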

Bypassing Craigslist's Bot Protection

Craigslist has implemented several measures to prevent automated scraping on its platform. These defenses include IP rate limiting, CAPTCHA challenges, user-agent monitoring, and session tracking. Understanding how these mechanisms work is key to navigating them responsibly while adhering to ethical and legal standards.

Common Anti-Bot Measures on Craigslist

- IP Rate Limiting: Craigslist tracks the number of requests from each IP address and blocks those that exceed the allowed threshold.

- CAPTCHA Challenges: Automated behavior often triggers CAPTCHA challenges, requiring human verification to proceed.

- User-Agent Monitoring: Default user-agent strings, especially those commonly used by scraping tools, are easily flagged.

- Session Tracking: Unusual or excessively rapid navigation patterns are detected and can result in blocking.
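A conservative response to rate limiting is to slow down rather than push harder. The sketch below computes an exponential backoff schedule and flags the status codes that should trigger it; treating 429 and 503 as the slow-down signals is an assumption, and both helper names are hypothetical.

```python
def backoff_delays(max_retries=5, base=2.0, cap=60.0):
    """Return the wait in seconds before each retry, doubling up to cap."""
    return [min(cap, base * (2 ** attempt)) for attempt in range(max_retries)]


def should_back_off(status_code):
    """Treat 429 (Too Many Requests) and 503 as signals to slow down."""
    return status_code in {429, 503}


print(backoff_delays())      # [2.0, 4.0, 8.0, 16.0, 32.0]
print(should_back_off(429))  # True
```

If the schedule runs out and the server still refuses requests, the safest reading is that the crawl should stop.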

Below are some methods to work around these defenses while maintaining ethical practices.

Methods to Avoid Getting Blocked

Rotating User Agents

Switching user agents frequently can help simulate requests coming from different browsers, making it harder for Craigslist to identify automated activity. Instead of using default Python user-agent strings, rotate through a list of up-to-date browser identifiers:

```python
import random

user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:109.0) Gecko/20100101 Firefox/121.0',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.2 Safari/605.1.15'
]

headers = {
    'User-Agent': random.choice(user_agents),
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
    'Accept-Language': 'en-US,en;q=0.5',
    'Accept-Encoding': 'gzip, deflate',
    'Connection': 'keep-alive',
    'Upgrade-Insecure-Requests': '1'
}
```

Proxy Rotation

Rotating proxies can distribute your requests across different IP addresses, helping to avoid rate limits. Residential proxies are particularly effective as they resemble regular home internet connections:

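```python
import random

import requests

# Placeholder entries: replace proxy1:port and proxy2:port with real proxy addresses
proxies_list = [
    {'http': 'http://proxy1:port', 'https': 'https://proxy1:port'},
    {'http': 'http://proxy2:port', 'https': 'https://proxy2:port'},
]


def make_request(url):
    """Fetch url through a randomly chosen proxy; return None on failure."""
    proxy = random.choice(proxies_list)
    # user_agents is the list defined in the previous snippet
    headers = {'User-Agent': random.choice(user_agents)}
    try:
        response = requests.get(url, headers=headers, proxies=proxy, timeout=10)
        return response
    except requests.RequestException:
        return None
```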

Why Choose Web Scraping HQ for Craigslist Data Extraction?

If you're working on a small project, a DIY approach to Craigslist scraping might do the trick. But when it comes to scaling up tasks like phone number extraction, things get a lot trickier. Managing proxies, solving CAPTCHAs, and keeping up with Craigslist's anti-bot measures require serious technical know-how and infrastructure. That’s where Web Scraping HQ steps in, offering a fully managed service that takes care of these complexities for you.

Web Scraping HQ has a proven track record in extracting data from real estate listings, with a focus on efficiently scraping phone numbers while overcoming Craigslist’s anti-bot defenses. Let’s break down how their features make these challenges easier to tackle.

DIY Scraping vs. Web Scraping HQ Managed Services

When comparing Web Scraping HQ to a DIY approach, the differences are clear. Building your own system requires significant time, money, and technical expertise. From developing proxy rotation systems to solving CAPTCHAs, maintaining data quality, and ensuring compliance, the DIY route can be a daunting and costly endeavor.

With Web Scraping HQ, you get a scalable, reliable infrastructure capable of handling large-scale extractions across multiple Craigslist regions or categories. No need to worry about performance bottlenecks or technical hurdles - they’ve got it covered.

FAQs

Get answers to frequently asked questions.

Yes, it is possible to scrape Craigslist using Web Scraping HQ's scraping tools.

Here are the steps to get contact info on Craigslist:

- Visit the Web Scraping HQ website.

- Log in to the web scraping API.

- Paste the URL into the API and wait 2-3 minutes.

- Receive the scraped data.

Here are the steps to extract data from Craigslist:

- Visit the Web Scraping HQ website.

- Log in to the web scraping API.

- Paste the URL into the API and wait 2-3 minutes.

- Receive the scraped data.